Pagoda Blog

How to Differentiate Between a Deepfake and Real Content

March 7, 2024

The idea that someone could make you say or do things against your will and completely outside your control is the stuff of disturbing sci-fi novels. But it’s also now reality with readily available AI technology. Since 2014, we’ve had the technology to create “deepfakes”: AI-generated video or audio clips that feature virtual clones of a person’s voice or face saying or doing things they never did.

While the underlying technology is not new, its widespread availability is, and it has advanced over the years to become incredibly convincing.

According to Yisroel Mirsky, an offensive AI researcher and deepfake expert at Ben-Gurion University, someone can now create a deepfake video from a single photo of a person and they can create a voice clip from only three to four seconds of audio. Fortunately, the most widely available deepfake technology requires closer to five minutes of audio and one to two hours of video.

Even though the software used by most cybercriminals requires these longer audio or video clips, the heavy use of social media platforms has made it relatively easy to find enough publicly available data to create a deepfake of almost anyone. This means that we need to be extra vigilant both when sharing and consuming content online.

So how exactly are deepfakes used to scam or blackmail their targets and how can you spot a deepfake and avoid falling prey to a scam?

How cybercriminals use deepfakes

Perhaps the most commonly reported use of deepfakes is when a cybercriminal clones a celebrity’s voice and posts an audio or video clip online of the individual saying something offensive or controversial. Celebrities, politicians, influencers, journalists, and anyone else with hours of high-quality audio and video content publicly available are highly vulnerable to these types of attacks.

Unfortunately, even those of us who rarely post on social media may be at risk. Banks, for example, have started using voice recognition software as a form of identification. This software requires the user to answer a few prompts over the phone that are recorded and stored to act as an audio “fingerprint” to grant access to the user’s accounts.

The problem with this form of identification is that it is also vulnerable to phishing attacks. If a scammer is able to uncover your mobile number and who you bank with, they can call you and impersonate a bank employee. They may ask you a few seemingly harmless questions in order to record samples of your voice. If you’ve published audio content online, they may simply use that as their source. After obtaining several minutes of audio data, they can call your bank’s automated teller and respond in an AI-generated clone of your voice in order to gain access to your bank accounts. A Vice journalist successfully broke into his own bank account using this approach.

Cybercriminals also use real-time deepfakes to engage in conversation with their target over the phone or a video call. A real-time deepfake can mimic the voice of a friend or colleague live during a phone call. The caller sounds enough like the person you know to convince you to share sensitive information and fall prey to a scam. These deepfakes are especially dangerous as they are harder to spot than traditional phishing scams, in part because we’re not yet trained to be on the lookout for them.

Real-time deepfakes are commonly used in online romance scams, but as the technology improves, the threats increase. A real-time deepfake was used to scam a CEO into transferring $243,000 into the cybercriminal’s account, and another was likely used to scam a grandmother out of $58,000 by convincing her that her grandson had called asking her to post bail.

We are already susceptible to falling prey to traditional email phishing scams because we tend to automatically trust requests that appear to come from an individual or organization we know and trust. When you add a believable voice or video to the request, it becomes that much harder to detect the scam.

So how can you spot a deepfake? Fortunately, the technology is still imperfect so if you know what to look for, you can often distinguish a deepfake from the real deal.

Ways to spot a deepfake

Deepfake technology has its flaws, so there are red flags to indicate that the person you’re watching or speaking with is actually an impersonation. Here’s what to look out for:

How to spot a video deepfake

If it’s a video, look for certain behaviors that appear “off.” For example, the person blinks too often or too little; they have hair in the wrong spot; their head is too large or too small for their body; their eyebrows look out of place; their skin doesn’t match the person’s age; and if they’re wearing glasses, often the lenses don’t reflect light the way they should. If it’s a real-time video deepfake (not a recording), ask the person to turn their head or put a hand in front of their face. AI technology isn’t trained to perform these movements realistically so these simple actions can quickly reveal whether or not you’re speaking with a real person.

How to spot an audio-only deepfake

Audio-only deepfakes are, no doubt, harder to spot. Unfortunately, the AI technology has gotten disturbingly effective at mimicking people’s voices, including inflections and their unique speech cadences.

For recorded deepfakes, the best defense is to double-check the validity of the content before accepting it as fact. This is especially important during elections, as deepfakes can be used to make politicians say anything the cybercriminal wants them to say. They may endorse a candidate they would never actually endorse or say they are against a hot-button policy that they are known for championing. In a recent example, a cybercriminal used AI voice-cloning software by ElevenLabs to create a deepfake of President Biden telling Democrats that they shouldn’t vote in the upcoming election.

If the audio deepfake is real-time, a good rule of thumb is to never share sensitive information with someone who calls you claiming to be your bank, someone from your work, or even a family member or close friend. It’s very rare that a personal or business connection will call asking for a money transfer or for your social security number. Your bank should never call you and ask for your account number, social security number, username or password, or any other piece of confidential information. Instead, tell the caller that you’re going to hang up and call them back before further engaging in the conversation. (Note that this only works if you can look up the caller’s phone number yourself. If the caller gives you a number to call back and they are indeed a deepfake, then you will be jumping right back into the scam.)
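For teams that like to write their policies down precisely, the hang-up-and-call-back rule above can be sketched as a small decision function. This is only an illustration, not a security tool: the `TRUSTED_NUMBERS` directory, the `SENSITIVE_REQUESTS` list, the `IncomingCall` type, and the phone numbers are all hypothetical names and values made up for this example. The point it shows is that the number offered by the caller is never used.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical directory of numbers you looked up yourself
# (back of your bank card, official website, your own contacts).
TRUSTED_NUMBERS = {
    "my bank": "+1-800-555-0100",
    "grandson": "+1-831-555-0123",
}

# Hypothetical list of requests that should always trigger a callback.
SENSITIVE_REQUESTS = {
    "account number",
    "social security number",
    "username",
    "password",
    "money transfer",
}


@dataclass
class IncomingCall:
    claimed_identity: str                    # who the caller says they are
    request: str                             # what they are asking for
    offered_callback: Optional[str] = None   # number the caller gives you


def respond_to_call(call: IncomingCall) -> str:
    """Decide what to do with a call, ignoring any number the caller offers."""
    if call.request not in SENSITIVE_REQUESTS:
        return "continue the conversation"

    # Only a number you found independently counts; call.offered_callback
    # is deliberately never consulted.
    trusted = TRUSTED_NUMBERS.get(call.claimed_identity)
    if trusted is None:
        return "hang up and find an independent number first"
    return f"hang up and call back on {trusted}"


if __name__ == "__main__":
    call = IncomingCall("my bank", "password", offered_callback="+1-900-555-0199")
    print(respond_to_call(call))
```

However convincing the voice sounds, the decision depends only on what is being asked for and on contact details you already trust.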

Navigating an online world with deepfakes

Like all AI tools, deepfake technology is only going to get better. This means deepfakes will only become more ubiquitous and more of a threat. In fact, social media platforms like LinkedIn have seen an uptick in the number of scams using artificial intelligence to create fake accounts.

As an SMB owner, staying informed on the latest cybersecurity threats from deepfake technology is critical in order to mitigate the risk of falling prey to a scam. Today it’s still possible to differentiate between a deepfake video and an authentic video using the clues outlined above. Even so, it’s also still possible to be duped by a deepfake, so remember to always verify the content in a questionable video with one or two other sources before believing what you see or hear.

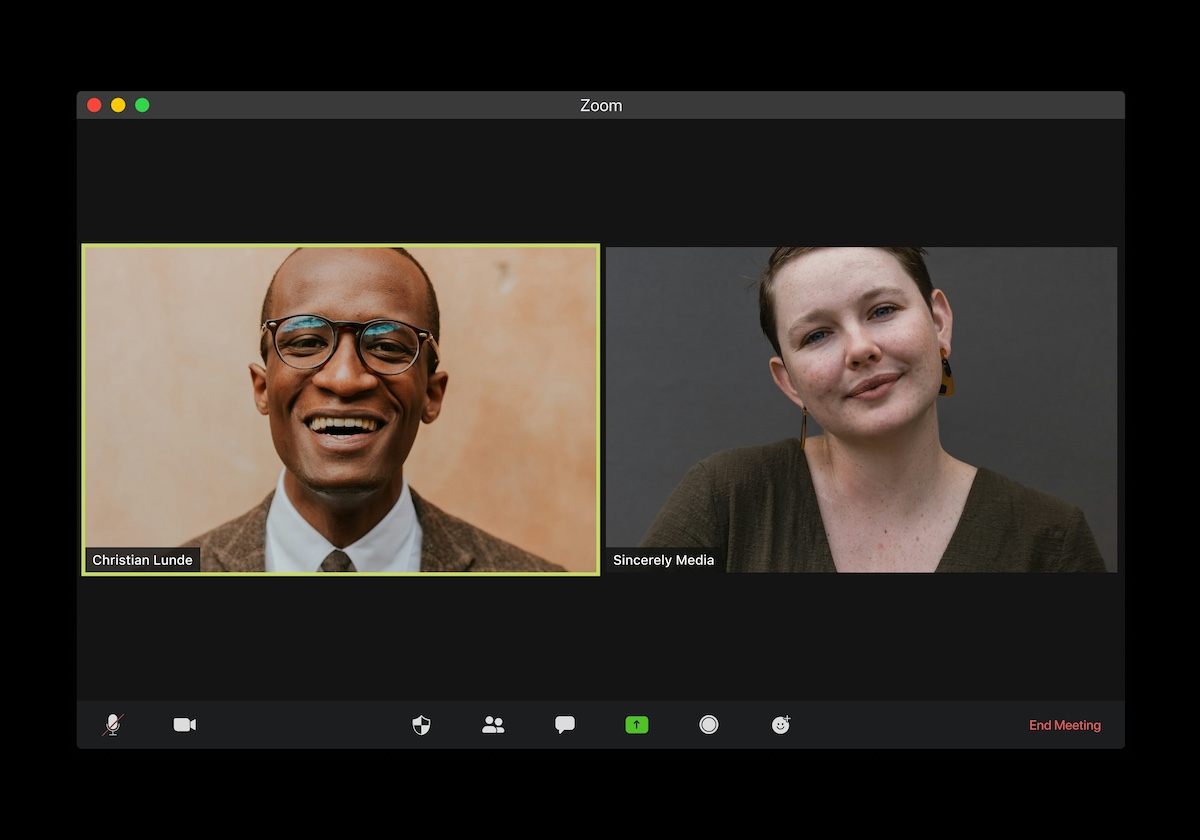

As you navigate the ever-evolving online world, guard your personal information and sensitive business information as well. Learn the risks of sharing too much personal information with AI, learn how to protect your business from identity theft, and make sure you have an incident response plan in place to mitigate the damage if your business is the target of an attack.

Feature Photo by visuals on Unsplash

-------------------

About Pagoda Technologies IT services

Based in Santa Cruz, California, Pagoda Technologies provides trusted IT support to businesses and IT departments throughout Silicon Valley, the San Francisco Bay Area and across the globe. To learn how Pagoda Technologies can help your business, email us at support@pagoda-tech.com to schedule a complimentary IT consultation.
